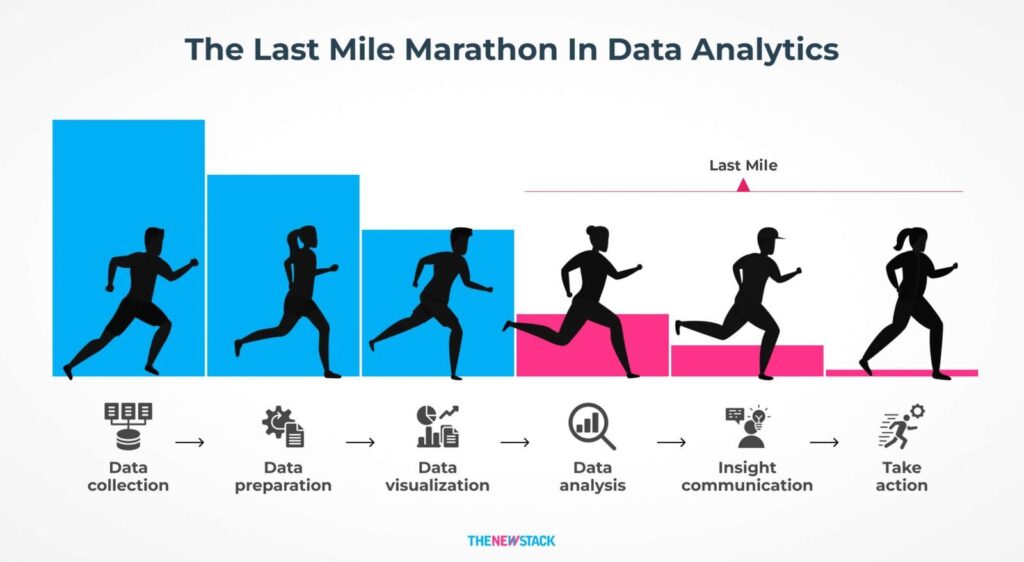

Retrieval Augmented Generation (RAG) — An Introduction

“The model hallucinated! It was giving me OK answers and then it just started hallucinating.” We’ve all heard or experienced it. Natural language generation models can sometimes hallucinate, i.e., generate text that is inaccurate for the prompt provided. In layman’s terms, they start making stuff up that’s not strictly related to […]
