A Simple Implementation of the Attention Mechanism from Scratch

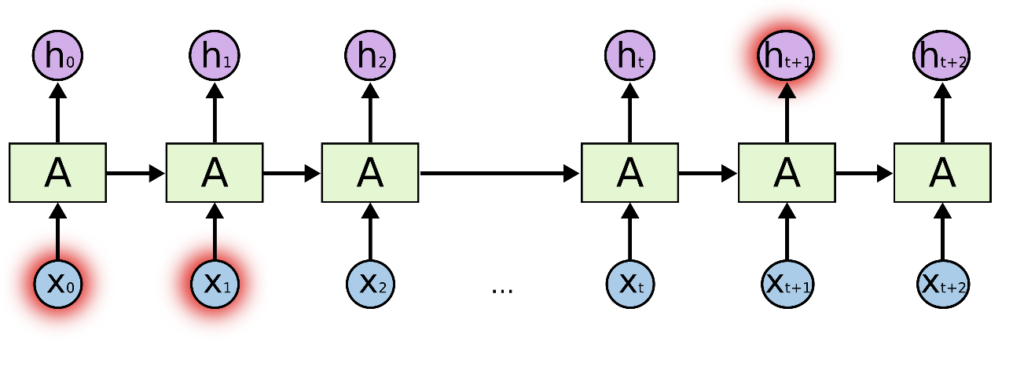

Introduction

The attention mechanism is often associated with the transformer architecture, but it was already used in RNNs. In machine translation (MT) tasks such as English-Italian, when you want to predict the next Italian word, you need your model to focus on, or pay attention to, the most important English words that are useful to make […]
